NVIDIA CUDA is a platform widely used for artificial intelligence (AI), deep learning, and scientific computing. Its extensive resources and support have made it the preferred option for developers in the field. AMD graphics cards, however, cannot use CUDA directly because it is exclusive to NVIDIA GPUs, so running CUDA-based workloads on AMD hardware is more complex and usually requires modifications.

Recent Developments

- Developers now have more options, as new tools allow CUDA programs to run on AMD GPUs.

AMD's Solutions

- AMD developed HIP to enable the conversion of CUDA code and positioned ROCm as a high-performance computing platform that competes with NVIDIA's.

AMD’s Alternatives to NVIDIA CUDA

Exploring GPU Computing Technologies

- OpenCL (Open Computing Language) is an open, cross-platform framework for parallel computing.

Benefits

- Runs on many types of processors, including CPUs and GPUs.

- Works well with a variety of hardware systems.

Challenges

- Can be difficult to use and program.

- Optimizing for specific hardware configurations can be challenging.

Ideal Applications

- Projects that require portability across diverse systems.

- HIP (Heterogeneous-compute Interface for Portability)

- An API developed to help port CUDA code to AMD GPUs.

Pros

- It simplifies the process of shifting CUDA-based projects to AMD GPUs.

- Reduces the need to modify existing CUDA code.

Downsides

- Some aspects of CUDA functionality may not be completely transferred over.

Ideal Applications

- Developers looking to transition their CUDA projects to AMD platforms.

- ROCm (Radeon Open Compute)

- An open-source platform focused on high-performance computing and designed specifically for AMD GPUs.

Benefits

- Designed to work on AMD devices.

- Strong focus on machine learning applications.

Challenges

- Not as widely supported as CUDA.

Ideal Applications

- Machine learning and high-performance computing (HPC) workloads on AMD GPUs.

Key Takeaways


- Conversion and compatibility layers such as HIP, along with cross-platform tools, help CUDA programs written for NVIDIA GPUs run on AMD GPUs, bridging the two ecosystems.

- AMD's ROCm platform supports high-performance computing, artificial intelligence, and machine learning, making AMD GPUs more versatile across a wide range of tasks.

- Advances in software are narrowing the gap between NVIDIA and AMD hardware as GPU options for developers.

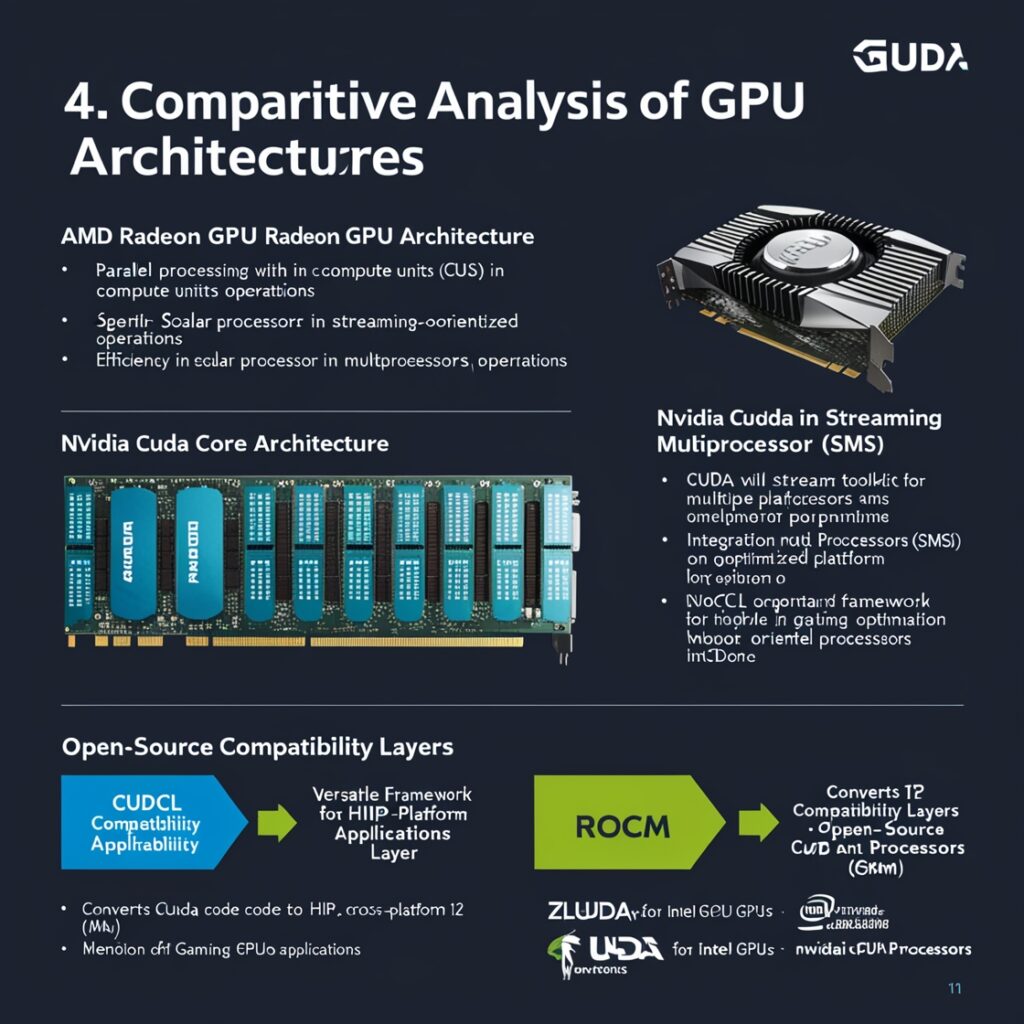

Comparative Analysis of GPU Architectures

AMD Radeon GPU Architecture

- Relies on stream processors organized into Compute Units (CUs) that process data in parallel.

- Well suited to vector-based workloads such as simulations and complex graphics rendering.

NVIDIA CUDA Core Architecture

- Groups CUDA cores into Streaming Multiprocessors (SMs), enabling massive parallelism.

- Optimized through the CUDA Toolkit, whose libraries and tools target high-performance workloads such as artificial intelligence and deep learning.
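To make the SM/CU parallelism above more concrete, here is a minimal Python sketch that emulates, sequentially, how a one-dimensional kernel launch splits work into thread blocks. On real hardware those blocks are scheduled across SMs or Compute Units and run in parallel. The index formula `blockIdx.x * blockDim.x + threadIdx.x` is the one CUDA and HIP kernels commonly use; the function name `launch_vector_add` and the default block size are illustrative assumptions.

```python
def launch_vector_add(a, b, threads_per_block=4):
    """Sequentially emulate a 1-D GPU launch computing out[i] = a[i] + b[i].

    Hypothetical helper: on a GPU every (block, thread) pair would run in
    parallel, with blocks distributed over SMs (NVIDIA) or CUs (AMD).
    """
    n = len(a)
    out = [0] * n
    # Enough blocks to cover all n elements (ceiling division).
    num_blocks = (n + threads_per_block - 1) // threads_per_block
    for block_idx in range(num_blocks):               # plays the role of blockIdx.x
        for thread_idx in range(threads_per_block):   # plays the role of threadIdx.x
            # Global index, as computed inside a CUDA or HIP kernel:
            # i = blockIdx.x * blockDim.x + threadIdx.x
            i = block_idx * threads_per_block + thread_idx
            if i < n:  # bounds check for the partially filled last block
                out[i] = a[i] + b[i]
    return out, num_blocks
```

For five elements and four threads per block, two blocks are launched; the bounds check stops the three surplus threads in the second block from writing past the end of the arrays.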

Open-Source Compatibility Layers for Software

- OpenCL

- An adaptable framework that runs on CPUs, GPUs, and other processing units across platforms, making it a good fit for heterogeneous systems.

- AMD's ROCm platform (HIP)

- Converting CUDA code to HIP lets it run on AMD GPUs.

- ZLUDA

- Allows CUDA applications to run on Intel GPUs, broadening the range of GPU compatibility options.

- DirectX 12

- A widely used API for gaming and graphics rendering that offers features for boosting GPU performance in applications.

This is where ZLUDA, an open-source project that runs CUDA applications on AMD GPUs through AMD's ROCm platform, comes into play. Rather than a complete rewrite of CUDA, it is a translation layer that lets software communicate with the GPU as though it were a CUDA device.
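The difference between a translation layer and a rewrite can be illustrated with a deliberately tiny Python analogy: the application keeps calling CUDA-named functions, and a shim forwards each call to an equivalent function on another backend. Every class and method name here (`FakeHipBackend`, `CudaShim`, `hip_malloc`, `hip_free`) is hypothetical, and ZLUDA itself operates at a much lower level than this, so treat the snippet only as a sketch of the dispatch idea.

```python
class FakeHipBackend:
    """Hypothetical stand-in for an AMD/HIP runtime; it only tracks allocations."""

    def __init__(self):
        self.allocations = {}
        self.next_handle = 1

    def hip_malloc(self, size):
        handle = self.next_handle
        self.next_handle += 1
        self.allocations[handle] = bytearray(size)  # pretend device memory
        return handle

    def hip_free(self, handle):
        del self.allocations[handle]


class CudaShim:
    """Exposes CUDA-style names and translates them into backend calls."""

    def __init__(self, backend):
        # Translation table: CUDA-facing name -> backend implementation.
        self._table = {
            "cudaMalloc": backend.hip_malloc,
            "cudaFree": backend.hip_free,
        }

    def call(self, cuda_name, *args):
        target = self._table.get(cuda_name)
        if target is None:
            # Untranslated calls are where compatibility gaps show up.
            raise NotImplementedError(f"{cuda_name} has no translation")
        return target(*args)
```

Calls missing from the table raise an error, which mirrors the practical limitation that a compatibility layer can only run software whose CUDA features it actually covers.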

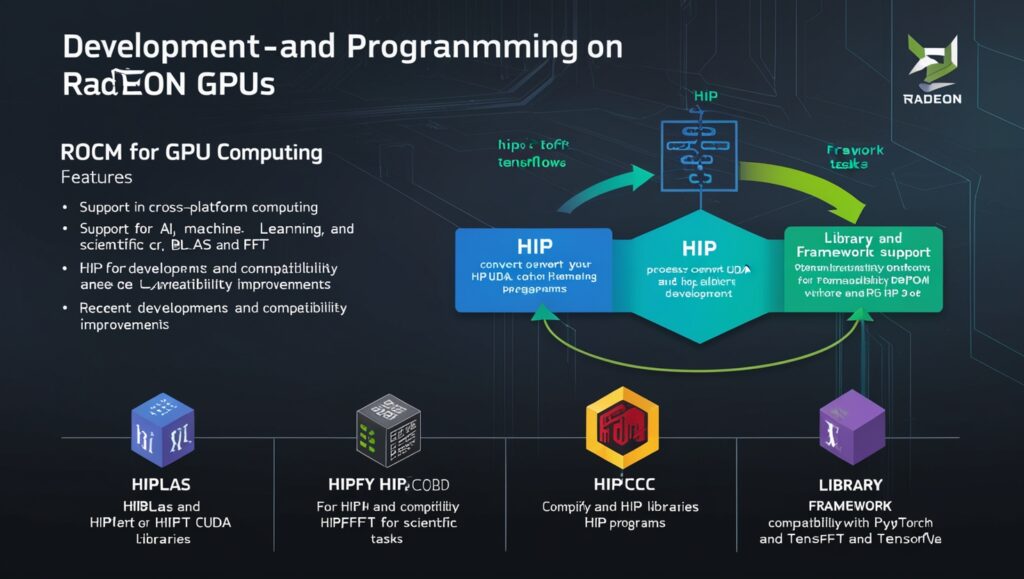

Development and Programming on Radeon GPUs

Harnessing ROCm for GPU Computing

Essential Characteristics

- Built for high-performance computing, artificial intelligence, and machine learning workloads.

- Efficient computation is supported by libraries such as BLAS (Basic Linear Algebra Subprograms) and FFT (Fast Fourier Transform) implementations, for example rocBLAS and rocFFT.
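The BLAS routine that GPU libraries are most often judged by is GEMM (general matrix multiplication), which computes C ← αAB + βC. As a rough illustration of what a GPU BLAS library such as rocBLAS evaluates, here is a slow pure-Python reference; it is not rocBLAS code, it assumes row-major lists of lists, and the function name `gemm` is our own. Unlike the BLAS routine, it returns a new matrix instead of updating C in place.

```python
def gemm(alpha, A, B, beta, C):
    """Reference GEMM: returns alpha * (A @ B) + beta * C as a new matrix."""
    m, k = len(A), len(A[0])
    k2, n = len(B), len(B[0])
    if k != k2 or len(C) != m or len(C[0]) != n:
        raise ValueError("incompatible matrix shapes")
    result = [[0.0] * n for _ in range(m)]
    for i in range(m):
        for j in range(n):
            # Dot product of row i of A with column j of B.
            acc = 0.0
            for p in range(k):
                acc += A[i][p] * B[p][j]
            result[i][j] = alpha * acc + beta * C[i][j]
    return result
```

A GPU library computes the same result, but tiles the matrices and spreads the inner loops across thousands of threads.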

Recent Advancements

- Improved compatibility and performance have made ROCm a viable option for more workloads.

- Better support for developing cross-platform applications that work with widely used frameworks.

Developing Cross-Platform Applications

Transition Process

- Converts CUDA code to HIP code so it can run on AMD GPUs.

Available tools include:

- HIPIFY, which automates converting CUDA source code into HIP.

- The resulting HIP programs can then be compiled and run on AMD hardware.
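Much of what source-to-source conversion does is systematic renaming, because the HIP runtime API mirrors the CUDA runtime API. The toy Python function below rewrites a handful of real CUDA runtime identifiers to their HIP counterparts. The function `toy_hipify` and its small mapping table are illustrative assumptions. The actual HIPIFY tools (hipify-perl and hipify-clang) cover far more APIs and handle cases this sketch ignores.

```python
import re

# A few CUDA runtime -> HIP runtime name correspondences (illustrative subset).
CUDA_TO_HIP = {
    "cuda_runtime.h": "hip/hip_runtime.h",
    "cudaMalloc": "hipMalloc",
    "cudaMemcpy": "hipMemcpy",
    "cudaMemcpyHostToDevice": "hipMemcpyHostToDevice",
    "cudaMemcpyDeviceToHost": "hipMemcpyDeviceToHost",
    "cudaFree": "hipFree",
    "cudaDeviceSynchronize": "hipDeviceSynchronize",
}

# Longest names are tried first and \b restricts matches to whole
# identifiers, so cudaMemcpy never matches inside cudaMemcpyHostToDevice.
_PATTERN = re.compile(
    r"\b("
    + "|".join(re.escape(name) for name in sorted(CUDA_TO_HIP, key=len, reverse=True))
    + r")\b"
)


def toy_hipify(cuda_source: str) -> str:
    """Rewrite known CUDA runtime identifiers to their HIP equivalents."""
    return _PATTERN.sub(lambda m: CUDA_TO_HIP[m.group(1)], cuda_source)
```

For example, `cudaMemcpy(d, h, n, cudaMemcpyHostToDevice);` becomes `hipMemcpy(d, h, n, hipMemcpyHostToDevice);`, while an unrelated identifier such as `mycudaMalloc` is left alone.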

Library and Framework Support

ROCm Programming Libraries

- hipFFT provides efficient Fast Fourier Transform computations.
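What an FFT library computes is the discrete Fourier transform, evaluated with a fast divide-and-conquer algorithm. A minimal CPU-only Python sketch of the classic radix-2 Cooley-Tukey recursion shows the idea. It is not hipFFT's implementation; the function name `fft` is our own, it uses the common forward-transform sign convention (exponent −2πik/n), and it only accepts power-of-two lengths.

```python
import cmath


def fft(x):
    """Recursive radix-2 Cooley-Tukey FFT (forward transform).

    len(x) must be a power of two; returns a list of complex numbers.
    """
    n = len(x)
    if n == 0 or n & (n - 1):
        raise ValueError("input length must be a power of two")
    if n == 1:
        return [complex(x[0])]
    even = fft(x[0::2])  # transform of the even-indexed samples
    odd = fft(x[1::2])   # transform of the odd-indexed samples
    out = [0j] * n
    for k in range(n // 2):
        # Twiddle factor exp(-2*pi*i*k/n) applied to the odd half.
        t = cmath.exp(-2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + t
        out[k + n // 2] = even[k] - t
    return out
```

Splitting each transform into two half-size transforms is what reduces the cost from O(n²) for a direct DFT to O(n log n), and GPU libraries parallelize those independent halves.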

Framework Compatibility

- Designed to work with popular machine learning frameworks such as PyTorch and TensorFlow, so it fits easily into existing workflows.
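A quick way to see which GPU stack an installed PyTorch build targets is to inspect its version metadata. ROCm builds set `torch.version.hip`, CUDA builds set `torch.version.cuda`, and on ROCm builds AMD GPUs are still reached through the `torch.cuda` namespace. The helper name `detect_torch_gpu_backend` and its return strings are our own; this is a sketch that also runs when PyTorch is not installed.

```python
def detect_torch_gpu_backend():
    """Report which GPU backend the installed PyTorch build targets."""
    try:
        import torch
    except ImportError:
        return "torch not installed"
    # ROCm builds expose a HIP version string; CUDA builds expose a CUDA one.
    if getattr(torch.version, "hip", None):
        backend = "rocm"
    elif getattr(torch.version, "cuda", None):
        backend = "cuda"
    else:
        return "cpu-only build"
    # On ROCm builds, torch.cuda.is_available() reports AMD GPUs as well.
    return f"{backend} build, GPU available: {torch.cuda.is_available()}"
```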

FAQs

What does the AMD ROCm platform entail, and what tools does it offer for high-performance computing?

AMD's ROCm (Radeon Open Compute) is a platform built for high-performance computing. It provides tools such as HIP (Heterogeneous-compute Interface for Portability), along with optimized libraries for artificial intelligence (AI), machine learning, and scientific applications.

How do ROCm's maturity and optimization compare with NVIDIA CUDA?

ROCm (Radeon Open Compute) is not yet as mature as NVIDIA's CUDA. Although ROCm supports AMD GPUs and offers a way to convert CUDA code through HIP (Heterogeneous-compute Interface for Portability), CUDA stands out for its extensive optimization and broad support ecosystem, which make it an effective and dependable choice for AI and machine learning projects.

Can PyTorch run on AMD GPUs, and how does its performance compare with NVIDIA CUDA GPUs?

PyTorch can run on AMD GPUs through ROCm. However, it may not match the efficiency seen on NVIDIA CUDA GPUs, because the CUDA ecosystem is more heavily optimized and more widely supported.

What challenges arise when using OpenCL on AMD hardware compared with ROCm or CUDA?

OpenCL provides general-purpose GPU computing on AMD hardware but is harder to optimize than ROCm or CUDA: ROCm offers integration and tools tailored to AMD GPUs, while CUDA is highly optimized for NVIDIA hardware.